[fusion_builder_container hundred_percent=”no” hundred_percent_height=”no” hundred_percent_height_scroll=”no” hundred_percent_height_center_content=”yes” equal_height_columns=”no” menu_anchor=”” hide_on_mobile=”small-visibility,medium-visibility,large-visibility” status=”published” publish_date=”” class=”” id=”” border_size=”” border_color=”” border_style=”solid” margin_top=”” margin_bottom=”” padding_top=”” padding_right=”” padding_bottom=”” padding_left=”” gradient_start_color=”” gradient_end_color=”” gradient_start_position=”0″ gradient_end_position=”100″ gradient_type=”linear” radial_direction=”center” linear_angle=”180″ background_color=”” background_image=”” background_position=”center center” background_repeat=”no-repeat” fade=”no” background_parallax=”none” enable_mobile=”no” parallax_speed=”0.3″ background_blend_mode=”none” video_mp4=”” video_webm=”” video_ogv=”” video_url=”” video_aspect_ratio=”16:9″ video_loop=”yes” video_mute=”yes” video_preview_image=”” filter_hue=”0″ filter_saturation=”100″ filter_brightness=”100″ filter_contrast=”100″ filter_invert=”0″ filter_sepia=”0″ filter_opacity=”100″ filter_blur=”0″ filter_hue_hover=”0″ filter_saturation_hover=”100″ filter_brightness_hover=”100″ filter_contrast_hover=”100″ filter_invert_hover=”0″ filter_sepia_hover=”0″ filter_opacity_hover=”100″ filter_blur_hover=”0″][fusion_builder_row][fusion_builder_column type=”1_1″ layout=”1_1″ spacing=”” center_content=”no” link=”” target=”_self” min_height=”” hide_on_mobile=”small-visibility,medium-visibility,large-visibility” class=”” id=”” hover_type=”none” border_size=”0″ border_color=”” border_style=”solid” border_position=”all” border_radius=”” box_shadow=”no” dimension_box_shadow=”” box_shadow_blur=”0″ box_shadow_spread=”0″ box_shadow_color=”” box_shadow_style=”” padding_top=”” padding_right=”” padding_bottom=”” padding_left=”” margin_top=”” margin_bottom=”” background_type=”single” gradient_start_color=”” gradient_end_color=”” gradient_start_position=”0″ 
gradient_end_position=”100″ gradient_type=”linear” radial_direction=”center” linear_angle=”180″ background_color=”” background_image=”” background_image_id=”” background_position=”left top” background_repeat=”no-repeat” background_blend_mode=”none” animation_type=”” animation_direction=”left” animation_speed=”0.3″ animation_offset=”” filter_type=”regular” filter_hue=”0″ filter_saturation=”100″ filter_brightness=”100″ filter_contrast=”100″ filter_invert=”0″ filter_sepia=”0″ filter_opacity=”100″ filter_blur=”0″ filter_hue_hover=”0″ filter_saturation_hover=”100″ filter_brightness_hover=”100″ filter_contrast_hover=”100″ filter_invert_hover=”0″ filter_sepia_hover=”0″ filter_opacity_hover=”100″ filter_blur_hover=”0″ last=”no”][fusion_text columns=”” column_min_width=”” column_spacing=”” rule_style=”default” rule_size=”” rule_color=”” hide_on_mobile=”small-visibility,medium-visibility,large-visibility” class=”” id=”” animation_type=”” animation_direction=”left” animation_speed=”0.3″ animation_offset=””]

Precision vs. Accuracy

Obtaining reliable measurements from 3D scanning is not as simple as it may appear. Several factors are at play, including the 3D scanner’s precision and accuracy capabilities. In this article, we’ll discuss how the two terms differ, what can alter them, and when and how you should correct them when they are not up to par.

What is the Difference Between Precision & Accuracy?

Precision and accuracy are two metrology terms that are often confused. This is because they both refer to the error of recorded measurements. Understanding the difference between the two is a crucial step to obtaining the desired data from a 3D scan of your part.

The term precision describes how close a set of measurements taken by the same scanner and operator are to each other. If the measurements are grouped around the same number, the results are considered to be precise. Essentially, this means that the measurement results are repeatable.

Accuracy, on the other hand, refers to a measurement’s proximity to the true or accepted value being measured. For example, if you were scanning a 1-inch block, the closer your scanner’s measurement is to 1.00”, the more accurate it would be considered.
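The two definitions above can be put into numbers. The following is a minimal sketch, not a method taken from any scanner's software: it assumes you have repeated readings of the same 1-inch reference block, and it uses the offset of the average from the true value as a stand-in for accuracy and the sample standard deviation as a stand-in for precision. The function name and the readings are hypothetical.

```python
from statistics import mean, stdev

def summarize_measurements(readings, true_value):
    """Return (bias, spread) for repeated readings of one reference dimension.

    bias   -> how far the average reading is from the true value (accuracy)
    spread -> sample standard deviation of the readings (precision)
    """
    bias = mean(readings) - true_value
    spread = stdev(readings)
    return bias, spread

# Hypothetical repeated scans of a 1.000 in block (illustrative values only).
readings = [1.002, 1.003, 1.001, 1.002, 1.002]
bias, spread = summarize_measurements(readings, true_value=1.000)
print(f"bias = {bias:+.4f} in, spread = {spread:.4f} in")
```

A small spread with a noticeable bias would suggest a scanner that is repeatable but off target, which is the kind of error a calibration is meant to correct.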

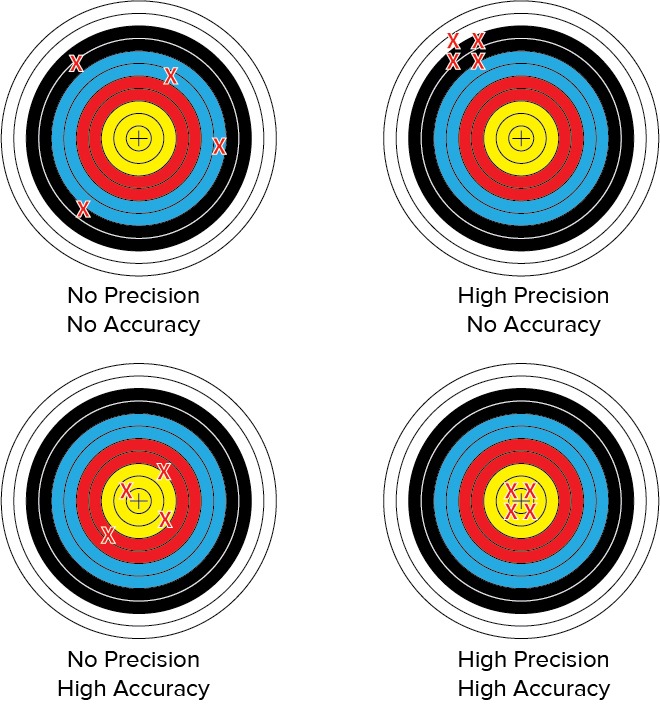

It is important to note that while precision and accuracy are closely related terms, they are independent of each other. This can best be explained through the classic example of darts on a dartboard where the bulls-eye/center is the true value:

- No Precision, No Accuracy: The darts are scattered around the board and are neither close to each other, nor close to the center of the target.

- High Precision, No Accuracy: The darts are grouped together in one area of the board, but they are not close to the center of the target.

- No Precision, High Accuracy: The darts are spaced around the center of the target so that the average of their distances is close to the bulls-eye.

- High Precision, High Accuracy: The darts are grouped together in the center of the board.

There is no universal standard for what counts as precise or accurate. Whether measurements are precise or accurate enough for your particular project is up to your discretion. Depending on your part and the required tolerances, you may decide that a larger or smaller margin of error is acceptable. However, a balance of both precision and accuracy will achieve the most reliable results. Without both, it can be difficult and risky to make design or production decisions based on a scan. By knowing your equipment is both precise and accurate, you can trust that you are receiving reliable results.
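Because the acceptable margin of error is a project decision, the four dartboard cases can be expressed as a check against tolerances you choose yourself. The sketch below is only an illustration: the function name, the two tolerance parameters, and the sample readings are all assumptions, not values recommended for any particular scanner or part.

```python
from statistics import mean, stdev

def classify(readings, true_value, bias_tol, spread_tol):
    """Label a set of readings using the four dartboard cases.

    bias_tol   -> largest acceptable offset of the average from the true value
    spread_tol -> largest acceptable sample standard deviation
    Both tolerances are chosen by the user for the project at hand.
    """
    accurate = abs(mean(readings) - true_value) <= bias_tol
    precise = stdev(readings) <= spread_tol
    return (
        f"{'High' if precise else 'No'} Precision, "
        f"{'High' if accurate else 'No'} Accuracy"
    )

# Hypothetical readings of a 1.000 in block (illustrative values only).
tight_but_offset = [1.020, 1.021, 1.019, 1.020]
scattered_but_centered = [0.990, 1.010, 0.995, 1.005]

print(classify(tight_but_offset, 1.000, bias_tol=0.005, spread_tol=0.002))
print(classify(scattered_but_centered, 1.000, bias_tol=0.005, spread_tol=0.002))
```

Loosening or tightening `bias_tol` and `spread_tol` changes which label a data set receives, which mirrors the point that "precise enough" and "accurate enough" depend on the tolerances your part requires.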

What Affects Precision & Accuracy?

Even though a 3D scanner can have superb precision and accuracy capabilities, it may not always produce measurements that reflect this potential. This is because several factors can affect the scanner’s precision and accuracy and degrade the quality of the scan.

The primary factors that can alter precision and accuracy are as follows:

- Ambient light that is too bright

- Movement of the scanner (picking up and relocating; base shaking during scanning)

- Dirt on the part to be scanned (excess grease, dust, etc.)

- Change in temperature and/or humidity

When Should You Check Precision & Accuracy?

Regardless of how careful you are with your scanner, calibration decay is inevitable and will occur over time as a result of some of the above factors. As such, it is a good idea to frequently check the calibration of your scanner.

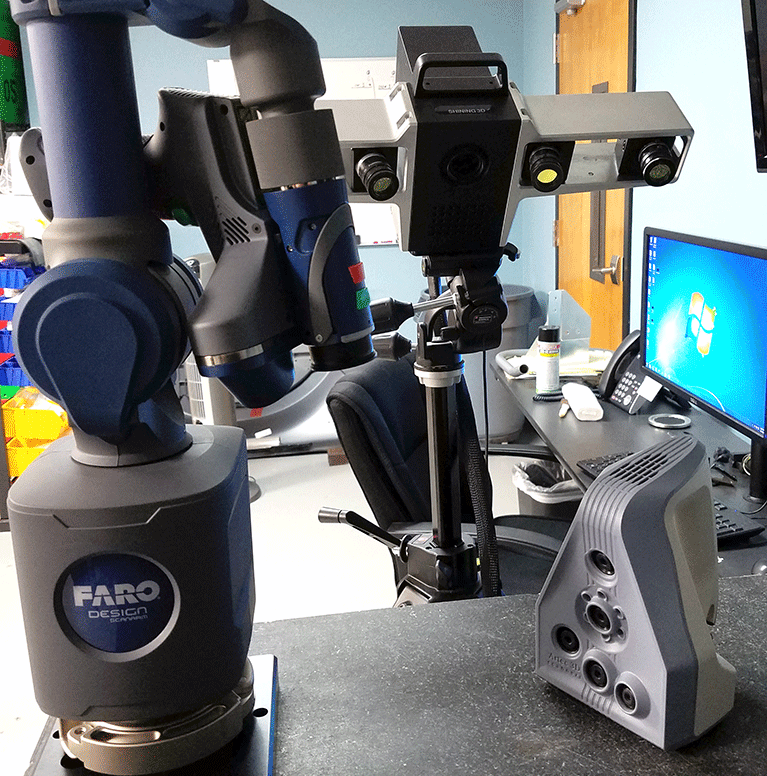

How often a calibration should be done greatly depends on what type of 3D scanner you are using. For example, at 3 Space, our blue light 3D scanner is mobile and thus requires frequent calibration since its location often changes from job to job. On the other hand, our Faro laser 3D scanner is hard-mounted in a fixed position where vibrations or other movements will not shift it. Since its location is fixed, it does not require calibration as frequently. Ultimately, the frequency with which a 3D scanner should be calibrated will depend on various factors including ambient temperature, object size, quality required, frequency of use, and more.

Apart from regular calibrations, there are sometimes visual cues within a scan that may indicate the scanner needs a calibration. One of these cues is ripples or waves present in the scan. Another is misalignment between scans taken from different angles. If either of these errors occurs in your scan, it is best to recalibrate the scanner before taking further measurements. Keep in mind that noise is common in 3D scans and does not necessarily indicate a need for calibration.

How Are Precision & Accuracy Fixed?

As previously mentioned, calibrations are necessary for the upkeep of a 3D scanner’s precision and accuracy. Specifically, calibration is part of the scanner’s software, and it works by comparing the scanner’s measurements of an object to the known values of that same object. By determining how far off the scanned measurements are from the true measurements, the software can make the necessary adjustments to bring the precision and accuracy back into alignment. Different objects can be used as long as you know their true measurements for comparison. The most common items used are calibration planes from calibration kits, gauge blocks, or calipers.

Calibration should only be conducted after the scanner has warmed up (been turned on for about 15-20 minutes). Otherwise, the calibration will likely be wrong because the scanner has not yet reached its operating temperature.
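The comparison step described above, measuring objects of known size and working out how far off the readings are, can be illustrated with a deliberately simplified one-dimensional sketch. Real scanner calibration software is considerably more involved and is not shown here. This example only assumes that the error can be modeled as a scale factor plus an offset, fitted by ordinary least squares. The function names and the gauge block values are hypothetical.

```python
def fit_linear_correction(measured, known):
    """Fit known ~= scale * measured + offset by ordinary least squares."""
    n = len(measured)
    mean_m = sum(measured) / n
    mean_k = sum(known) / n
    sxx = sum((m - mean_m) ** 2 for m in measured)
    sxy = sum((m - mean_m) * (k - mean_k) for m, k in zip(measured, known))
    scale = sxy / sxx
    offset = mean_k - scale * mean_m
    return scale, offset

def apply_correction(value, scale, offset):
    """Map a raw reading to a corrected reading."""
    return scale * value + offset

# Hypothetical gauge blocks (inches) and the raw readings a scanner gave for them.
known = [1.000, 2.000, 4.000]
measured = [1.012, 2.022, 4.042]

scale, offset = fit_linear_correction(measured, known)
corrected = [apply_correction(m, scale, offset) for m in measured]
print([round(c, 4) for c in corrected])
```

Using several reference sizes rather than one lets the fit separate a constant offset from an error that grows with the size of the object, which a single gauge block cannot distinguish.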

3D Scanning at 3 Space

Here at 3 Space, we offer multiple 3D scanning services, including white light, blue light, laser light, and touch probe. Our team of expert engineers regularly calibrates each technology to ensure that every scan is the best it can be. For more information about 3D scanning or to get a quote, contact us today.

[/fusion_text][/fusion_builder_column][/fusion_builder_row][/fusion_builder_container]